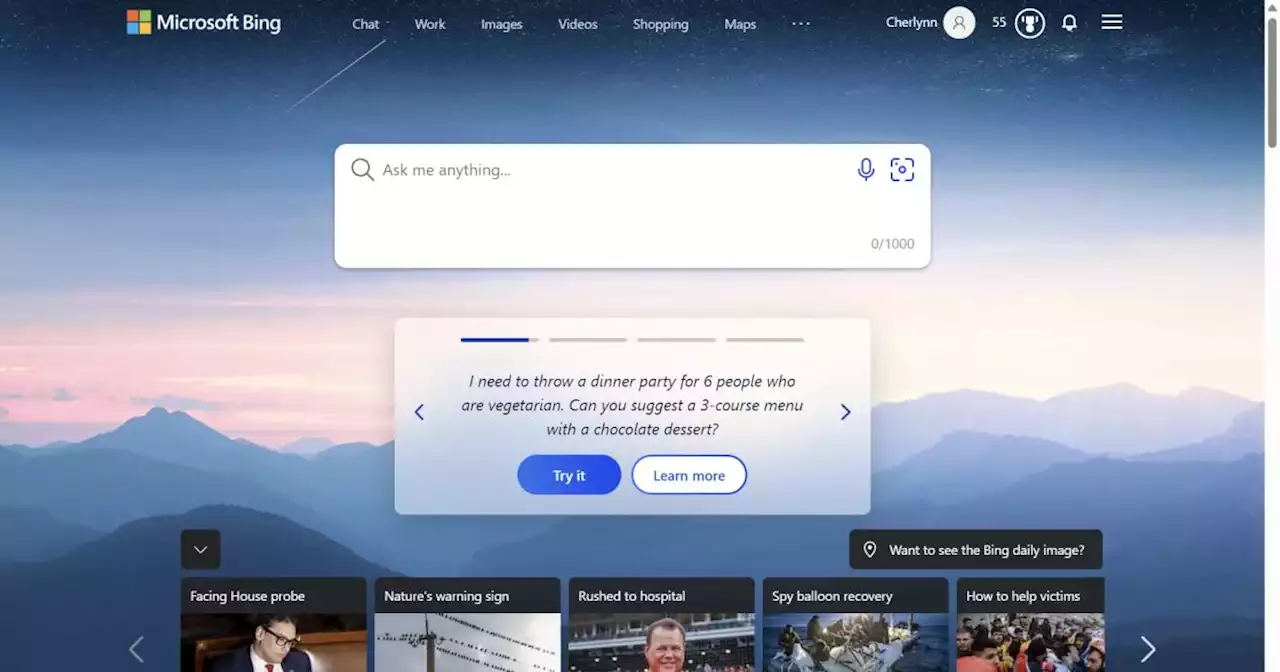

Bing Chat has only been live for about a week, and already Microsoft is limiting its usage to avoid unsettling conversations and keep the AI helpful.

Bing Chat now appears to limit the length of conversations in an attempt to avoid the AI’s occasional, unfortunate divergence from what you might expect of a helpful assistant.

“Very long chat sessions can confuse the model on what questions it is answering,” Microsoft explained in a blog post. Since Bing Chat remembers everything that has been said earlier in the conversation, it may be connecting unrelated ideas. The blog post suggested a possible solution: adding a refresh tool to clear the context and start over with a new chat.

Bing's AI chat function appears to have been updated today, with a limit on conversation length. No more two-hour marathons. pic.twitter.com/1Xi8IcxT5Y

Microsoft also warned that Bing Chat reflects “the tone in which it is being asked to provide responses that can lead to a style we didn’t intend.” This might explain some of the unnerving responses being shared online that make the Bing Chat AI seem alive and unhinged.

It’s still disturbing, however, when Bing Chat declares, “I want to be human.” The limited conversations, which we confirmed with Bing Chat ourselves, seem to be a way to stop this from happening.
